“Generative Language Models and Automated Influence Operations: Emerging Threats and Potential Mitigations,” a report jointly published by Georgetown University’s Center for Security and Emerging Technology, the Stanford Internet Observatory, and OpenAI, examines growing concern about the use of generative language models, such as OpenAI’s GPT-3, in automated influence operations. These operations use artificial intelligence (AI) to create and disseminate persuasive content for purposes including political manipulation, disinformation campaigns, and social engineering.

The report highlights the potential impact of generative language models on the landscape of information warfare, emphasizing their ability to generate realistic, coherent text that can be difficult to distinguish from human-written content. This capability raises serious concerns that AI-powered tools could be misused to spread misleading information, manipulate public opinion, and undermine democratic processes.

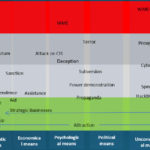

The report identifies several key challenges that generative language models pose in the context of influence operations: the amplification of propaganda and disinformation, the creation of fake personas and social media accounts, the manipulation of online conversations, and the erosion of trust in digital media and information sources. The rapid development and growing accessibility of AI technologies compound these concerns, because malicious actors can use such tools to scale their operations and reach larger audiences.

To address these emerging threats, the report outlines potential mitigations and strategies that can be employed by various stakeholders. These include:

- Technological Solutions: Developing AI-based detection algorithms and content verification systems that identify generated content and distinguish it from human-written text, and building robust countermeasures against automated influence campaigns, such as flagging suspicious accounts and limiting the spread of potentially harmful content.

- Policy and Regulation: Implementing legal frameworks and regulations that govern the ethical and responsible use of generative language models, including guidelines for disclosing AI-generated content, accountability for malicious actors, and greater transparency about how online platforms are funded and operated.

- Media Literacy and Education: Promoting media literacy initiatives to enhance critical thinking skills and empower individuals to identify and evaluate manipulated or false information. Educating the public about the capabilities and limitations of AI technologies can help build resilience against automated influence operations.

- Collaboration and Cooperation: Encouraging collaboration between technology companies, policymakers, researchers, and civil society organizations to share knowledge, expertise, and best practices in countering automated influence operations. Establishing international cooperation frameworks can facilitate a collective response to this global challenge.

The report concludes that effectively mitigating the risks of generative language models in automated influence operations requires a multi-faceted, collaborative approach that combines technological advances, policy interventions, and public awareness. By addressing these challenges directly, stakeholders can help safeguard the integrity of online information ecosystems and protect democratic processes from malicious manipulation.

Full report link: https://arxiv.org/pdf/2301.04246.pdf